
ChatGPT is a large language model (LLM) developed by OpenAI and launched in late 2022. It generates human-like responses to user prompts by drawing on patterns from vast training data sourced from across the internet. While it doesn’t "think" or understand like a human, it uses statistical relationships in language to generate context-relevant replies.

Since its unveiling, ChatGPT has reshaped the trajectory of AI development and affected nearly every major industry. By early 2025, ChatGPT had reached 400 million weekly users, and its user base continues to grow rapidly.

ChatGPT is free and easy to access, which makes it convenient, but also easy to use without considering the risks. Understanding AI’s limitations and how ChatGPT handles data can go a long way towards using it more safely.

How safe is ChatGPT?

ChatGPT is generally safe to use. OpenAI has implemented multiple cybersecurity safeguards to protect user data and reduce the risk of unauthorized access or data leaks. While conversation data is collected to help improve the model, users can opt out of having their chats used for training.

That said, like any emerging technology, ChatGPT hasn’t been without issues. In 2023, a technical glitch briefly exposed snippets of users’ conversations. OpenAI resolved the problem quickly, but as LLMs continue to evolve, privacy-conscious users should remain aware that new risks may emerge over time.

ChatGPT security features

OpenAI protects ChatGPT and its users with a wide range of security, privacy, and safety features, including data encryption, privacy controls, and content moderation.

Here are some ChatGPT security features:

- Privacy controls: Users can opt out of having their data collected for model training purposes, either permanently or via the “Temporary chat” feature, which automatically deletes chat data after 30 days.

- Encryption: Your data, including your chats, is encrypted in transit and at rest, which makes it much harder for unauthorized parties to read.

- Data security compliance: ChatGPT is designed to comply with data protection regulations, such as the GDPR and CCPA.

- Regular security audits: Independent firms conduct frequent penetration tests to identify vulnerabilities and strengthen overall system security.

- Threat detection team: A dedicated Security Detection and Response Team monitors for suspicious activity and responds to emerging threats.

- AI-specific security: Special security protocols are in place to help prevent attackers from exploiting ChatGPT to execute malicious commands or gain unauthorized access.

- Content moderation: The model is designed to avoid generating hateful, violent, or illegal content, with automated systems flagging questionable outputs for possible human review.

- AI monitoring and updates: Cybersecurity and privacy measures are continuously refined, with regular system updates to improve protection and ensure data safety.

Does ChatGPT collect your data?

Yes, ChatGPT collects your data. According to OpenAI’s privacy policy, ChatGPT collects personal account data and data about your devices, location, and cookies. ChatGPT also collects “your prompts and other content you upload, such as files, images, and audio” — essentially, anything you type into the prompt box.

By default, OpenAI can use your conversations to help improve future AI models like GPT-5. If you’re logged in to a ChatGPT account, you can opt out of this data usage by adjusting your settings. But anything you input while you’re logged out may be used for model training.

ChatGPT collects your chat data for two main reasons:

- Improving the performance of its AI models: ChatGPT “learns” by analyzing vast amounts of data, and OpenAI uses conversation data to refine and train future models. Analyzing user prompts and responses helps the system better understand language patterns, fix errors, and improve performance over time.

- Ensuring safety and preventing misuse: Even if you opt out of data being used for training, OpenAI retains your conversations for 30 days to monitor for abuse, such as attempts to generate harmful content or exploit the system. In rare cases, your chat may be viewed by a human reviewer under strict privacy and security controls.

Does ChatGPT share your data?

Yes, OpenAI may share your data, mainly with service providers such as cloud infrastructure and analytics partners that help operate and maintain the service. Its privacy policy also allows disclosure in limited cases, such as to comply with legal obligations. Importantly, OpenAI does not share your data for advertising, marketing, or commercial resale purposes.

Although data sharing with third parties is limited, purpose-specific, and protected by contractual safeguards, there’s still inherent risk. As with any online service that processes and stores user data, it could be exposed in the event of a data breach or technical failure.

Risks of using ChatGPT

While ChatGPT can be a powerful tool, it’s not without risks. From privacy concerns and misinformation to potential misuse by bad actors, users should understand the limitations and vulnerabilities that come with AI-generated content, especially when relying on it for sensitive tasks or decision-making.

Risks to ChatGPT users

Here are some of the ChatGPT security and privacy risks to be aware of if you use it:

- Data leaks: ChatGPT has been affected by data leaks and security incidents in the past. OpenAI has said that some of these incidents did not compromise customer or partner information, but any cloud-based service carries some risk of future breaches that could expose user conversations or metadata.

- Human vulnerabilities: In limited cases, authorized OpenAI staff or affiliated third-party personnel may access user data to investigate abuse or ensure system stability. If those individuals abuse their authority or are targeted through social engineering, user privacy could be compromised.

- Fake ChatGPT apps: Some cybercriminals exploit ChatGPT’s popularity by creating fake apps that mimic its interface and name. These malicious copies may request invasive permissions, install spyware, or steal login credentials.

- AI model exploitation: Hackers may try to manipulate ChatGPT with carefully crafted prompts designed to bypass its safety systems (known as jailbreaking), or hide malicious instructions in content it processes (known as prompt injection). OpenAI has implemented multiple layers of defense against these attacks, but no system is fully immune to advanced exploits.

General risks of ChatGPT and AI tools

AI tools like ChatGPT introduce security and privacy risks, not just for users, but for the broader public as well.

Some of these new AI threats include:

- Social engineering attacks: Scammers can use ChatGPT to mass-produce convincing deceptive emails, text messages, and other malicious communications, then use them to carry out phishing attacks and other manipulative tricks.

- Deepfakes: Hackers can use AI to generate synthetic images, videos, and vocal audio that imitate a real person. These “deepfakes” are convincing digital doubles that can trick you into giving up your data.

- Inaccuracies and misinformation: Because ChatGPT doesn’t “know” anything, it may generate content that sounds accurate but isn’t. Such “hallucinations” are inherent to how LLMs work, making fact-checking essential, especially when using them as a research or decision-making assistant.

- AI bias: ChatGPT is trained on huge sets of data, but these sets are not always representative of reality. Incomplete data can lead to bias in ChatGPT’s output. ChatGPT has been found to show bias regarding gender, race, and religion in its replies.

Do the risks outweigh the benefits?

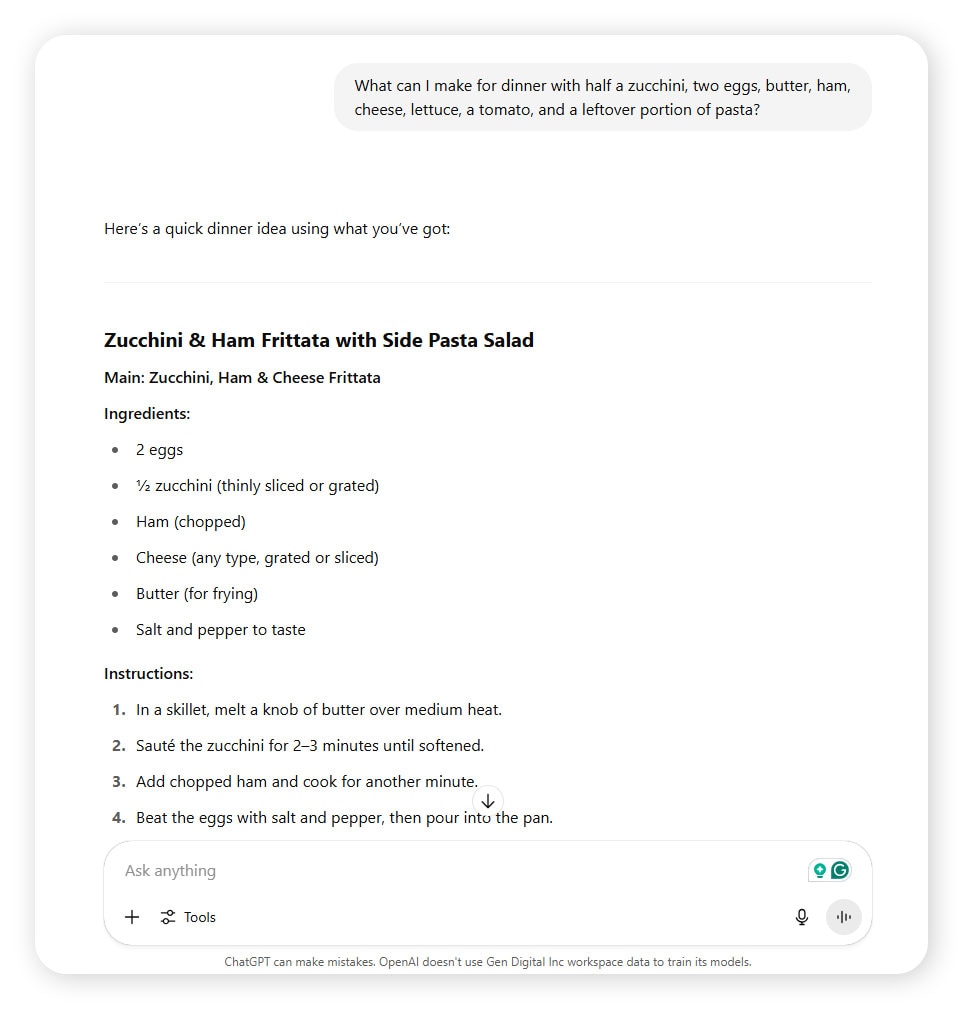

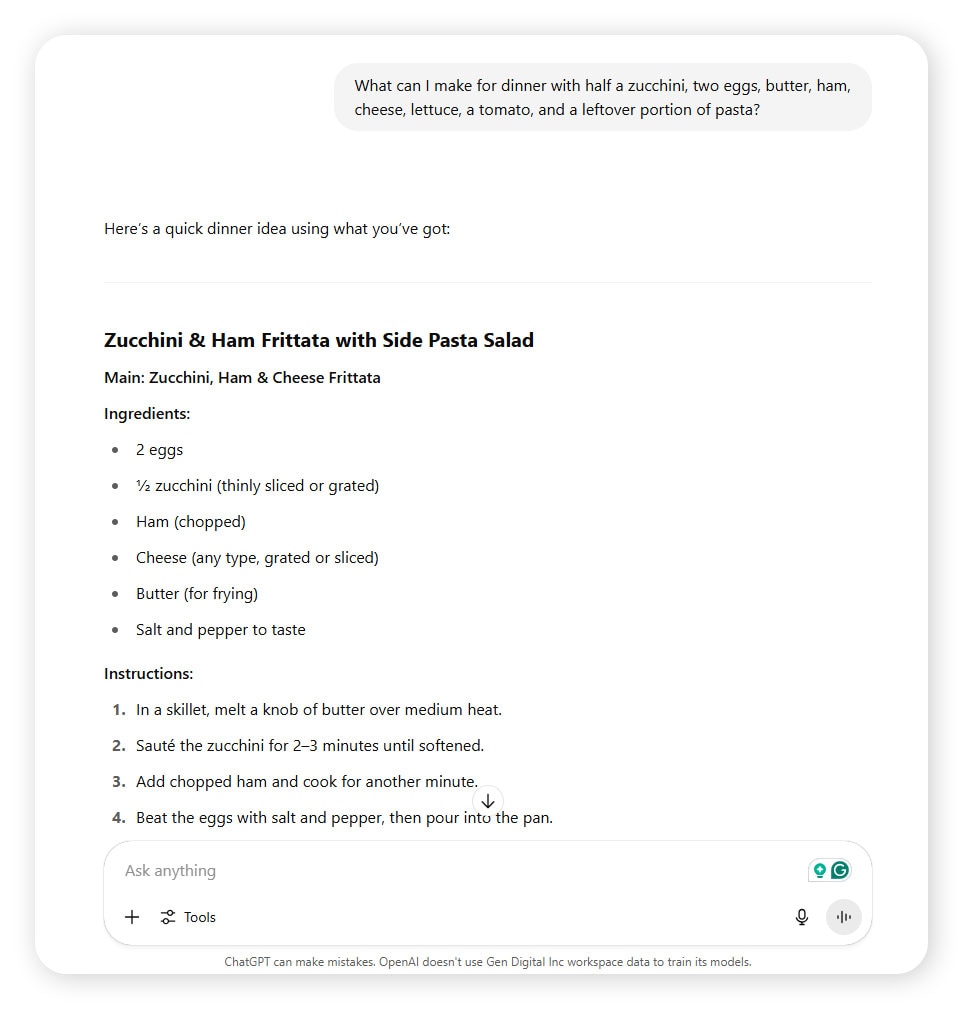

While there are real risks associated with it, ChatGPT offers substantial benefits thanks to its ability to interpret language, generate content, and identify patterns quickly. As an advanced AI assistant, it can streamline a wide range of everyday and specialized tasks.

For example, ChatGPT can review a complex legal document (like an auto loan agreement) in seconds and highlight missing or unclear terms. Or it can suggest creative recipes based on whatever ingredients you have on hand.

Today, people are increasingly relying on ChatGPT for:

- Writing support, from brainstorming ideas to editing drafts.

- Summarizing content such as articles, emails, or reports.

- Translating text across dozens of languages.

- Coding and tech assistance, including debugging, scripting, and documentation.

- Boosting productivity by organizing tasks, setting reminders, or drafting messages.

- Interpreting contracts by simplifying complex legal or financial language.

For many, the benefits far outweigh the risks. The key is understanding how to use ChatGPT responsibly: avoid sharing sensitive data, double-check its output, and stay informed about how it works behind the scenes. When used with care, it can be a powerful, time-saving tool.

How to safely use ChatGPT

To use ChatGPT safely, avoid sharing personally identifying information in conversations, only use the official ChatGPT app, and enable two-factor authentication on your account.

Follow these AI security best practices to stay safe using ChatGPT:

- Regularly review OpenAI’s privacy policy: OpenAI changes the ChatGPT privacy policy from time to time. Read it whenever it’s updated to understand how your data is being stored and used.

- Avoid sharing sensitive information: Don’t share anything on ChatGPT that you don’t want another human to read. Don’t share anything that could reveal your passwords, account details, location, or any other sensitive info.

- Only use the official ChatGPT app: Download the official ChatGPT app from Google Play or the Apple App Store. Or, access it via a web browser at https://chatgpt.com.

- Connect to a secure network: Using ChatGPT on unsecured public Wi-Fi can make it easier for hackers to intercept your login details and chats. Use ChatGPT only on a trusted private network, or turn on a VPN before you log in.

- Enable two-factor authentication (2FA): 2FA keeps your ChatGPT account safer by requiring two methods of authentication to log in, such as your password and a one-time code.

- Check permissions on third-party integrations: ChatGPT supports many third-party extensions, such as custom GPTs and connectors. Always check the permissions they request before allowing them to access your account or data.

- Keep the ChatGPT app updated: OpenAI frequently releases updates to improve ChatGPT’s performance and security. Update the app regularly, or turn on automatic updates, to stay safer and get new features.

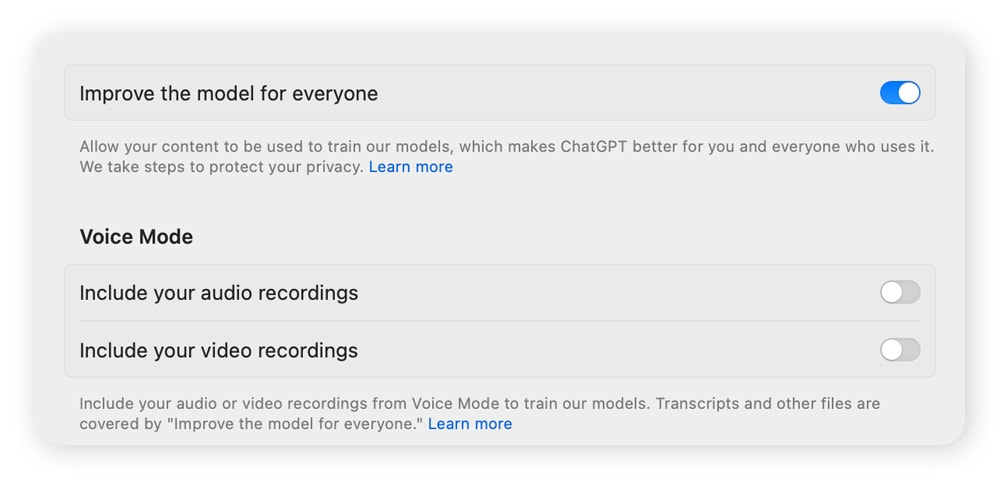

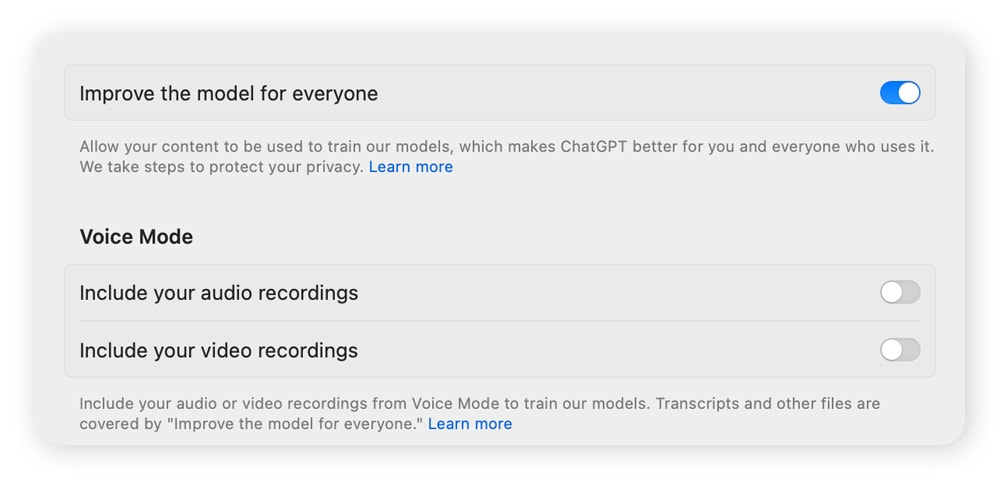

- Stop your content being used for training: In your ChatGPT profile, go to “Settings” > “Data controls” > turn off “Improve the model for everyone.” This will stop ChatGPT from using your conversations to improve future models.

Keep your data safer with LifeLock

ChatGPT is generally safe to use, but that doesn’t mean it’s immune to risk. OpenAI collects your personal info and conversation data, including your prompts. And, it shares some data with its third-party partners. Anywhere your data is stored online could potentially be vulnerable to a leak or breach.

To keep yourself safer from the fallout of these events, get identity theft protection from LifeLock. We constantly scour the web for exposed personal data and alert you if we find it to help you safeguard your accounts and identity. And, should the worst occur, our dedicated U.S.-based restoration specialists are here to help you recover, so you’re never left to face it alone.

FAQs

How do I know if I’m on the real ChatGPT page?

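You can tell if you’re on the real ChatGPT page by checking the URL (web address) in your browser’s address bar. The official ChatGPT website is https://chatgpt.com, so the domain should read exactly chatgpt.com. Be wary of lookalike addresses that add extra words, characters, or a different domain ending.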

Is ChatGPT safe to download?

Yes, the official ChatGPT app is safe when downloaded from trusted sources. Look for it on the Apple App Store or Google Play Store, and confirm that the developer is listed as OpenAI. Avoid third-party app stores, which may host fake or malicious versions.

Is ChatGPT free to use?

Yes, ChatGPT is free to use. Anyone can use it with or without a ChatGPT account by visiting chatgpt.com in their browser. OpenAI also offers paid plans that include access to more advanced features and newer models, but the core experience remains free.

ChatGPT is a trademark of OpenAI.

Editor’s note: Our articles provide educational information. LifeLock offerings may not cover or protect against every type of crime, fraud, or threat we write about.


LifeLock is part of Gen – a global company with a family of trusted brands.

Copyright © 2026 Gen Digital Inc. All rights reserved. Gen trademarks or registered trademarks are property of Gen Digital Inc. or its affiliates. Firefox is a trademark of Mozilla Foundation. Android, Google Chrome, Google Play and the Google Play logo are trademarks of Google, LLC. Mac, iPhone, iPad, Apple and the Apple logo are trademarks of Apple Inc., registered in the U.S. and other countries. App Store is a service mark of Apple Inc. Alexa and all related logos are trademarks of Amazon.com, Inc. or its affiliates. Microsoft and the Windows logo are trademarks of Microsoft Corporation in the U.S. and other countries. The Android robot is reproduced or modified from work created and shared by Google and used according to terms described in the Creative Commons 3.0 Attribution License. Other names may be trademarks of their respective owners.