Artificial intelligence (AI) leapt from the pages of science fiction and computer science labs into the mainstream in late 2022 with the launch of ChatGPT. Since then, adoption of generative AI (GenAI) has only continued to grow, with countless apps baking it into their functionality and diverse companies using it to boost productivity.

However, AI programs have also empowered criminals. While some low-level scammers misuse tools like ChatGPT to polish scam emails, most organized cybercriminals favor nefarious AI tools such as FraudGPT and WormGPT. Unlike legitimate platforms, these are built without safeguards, making it easy to generate malware and other illicit content.

This has led to a sharp rise in AI-assisted fraud and identity theft. According to a 2024 Signicat report, fraud incidents in the financial sector have risen by an estimated 80% since 2022, and AI now reportedly plays a role in over 40% of fraudulent activity.

How do attackers use AI for identity theft?

Criminals can use AI at every stage of an identity theft attack — from the first stages of planning to eventual deployment. Cybercriminals can leverage AI to create more technically advanced scams than ever before, like deepfakes, or to scale their social engineering attacks to target larger groups. AI programs could even be trained to execute sophisticated, automated attacks completely independently.

Here are some of the most common ways attackers use AI in identity theft, scams, or fraud.

Photo and video deepfakes

AI can generate realistic photos and videos of real people, from politicians to your family members. These AI imitations are called deepfakes, and they’re often used in scams to gain a target’s trust.

Scammers may create deepfakes to trick people into handing over their personal information or money. For example, in 2024, deepfakes of Elon Musk recommending scam investments reportedly contributed to billions of dollars in fraud losses.

Deepfakes are now used in about 1 in 15 fraud attempts in the financial sector, an increase of over 2,100% since GenAI tools gained widespread adoption.

Voice cloning

Voice cloning is when AI is used to imitate someone’s voice. It’s also known as an audio deepfake. Scammers use voice cloning to orchestrate vishing (voice phishing) attacks, often urging their victims to provide sensitive personal information or send cash. It’s estimated that more than 1 in 4 people were targeted by voice cloning scams in 2024 alone.

AI can clone any voice nearly instantly by analyzing just a few seconds of vocal audio, which scammers often find by scraping social media accounts. Then, they can target someone with a convincing vishing call or voice note, imitating a family member, accountant, or doctor, for example.

AI-written phishing messages

Not all AI-powered scams rely on fake photos, videos, or voices. Scammers can also use AI tools to write convincing phishing messages sent to targets via text, email, or social media.

Traditionally, these messages were often easy to spot because of their strange phrasing, grammatical errors, and misspelled words. But now, cybercriminals from all over the globe can craft fake messages that are nearly indistinguishable from authentic ones.

AI also makes it easier for scammers to deploy phishing messages at scale, even scraping details from targets’ social media profiles to personalize them. That means victims may face more attacks that are harder than ever to detect as scams.

Synthetic documents

Synthetic identity theft, when identity thieves combine real stolen information with fake details to create a fictitious identity, isn’t new. But AI can help sophisticated identity thieves make their synthetic identities appear even more real by forging convincing fake bank statements, invoices, ID cards, or even contracts.

Fraudsters can use generative AI tools to create these documents in seconds, with no need for design skills or expensive software. They can then use them in a variety of fraudulent schemes, such as applying for credit or creating new online accounts in your name.

Spyware

Spyware is a type of malware that infiltrates devices to spy on activity. It can secretly install itself on your phone or computer if you click a malicious link in an ad or phishing message, for example. Once in place, it might record sensitive personal information you enter online, like your financial account logins or Social Security number, and transmit it to a cybercriminal who can use it to steal your identity.

AI can help cybercriminals develop more advanced spyware that can break through stronger device security systems, more reliably avoid detection, and more accurately target high-value sensitive personal information to steal. These improved capabilities come as the risk of mobile spyware increases, with threats doubling in 2024.

Tips to help defend against AI identity theft

Although AI-powered scams are generally harder to detect than their traditional counterparts, there are several key warning signs to look out for. If you ever suspect that you might be a target of AI identity theft or fraud, follow these tips:

- Use AI against itself: AI security is proving to be an effective tool for fighting against AI scams. AI scam detectors like Norton Genie can detect AI-generated messages with over 90% accuracy, helping keep you safer against emerging threats.

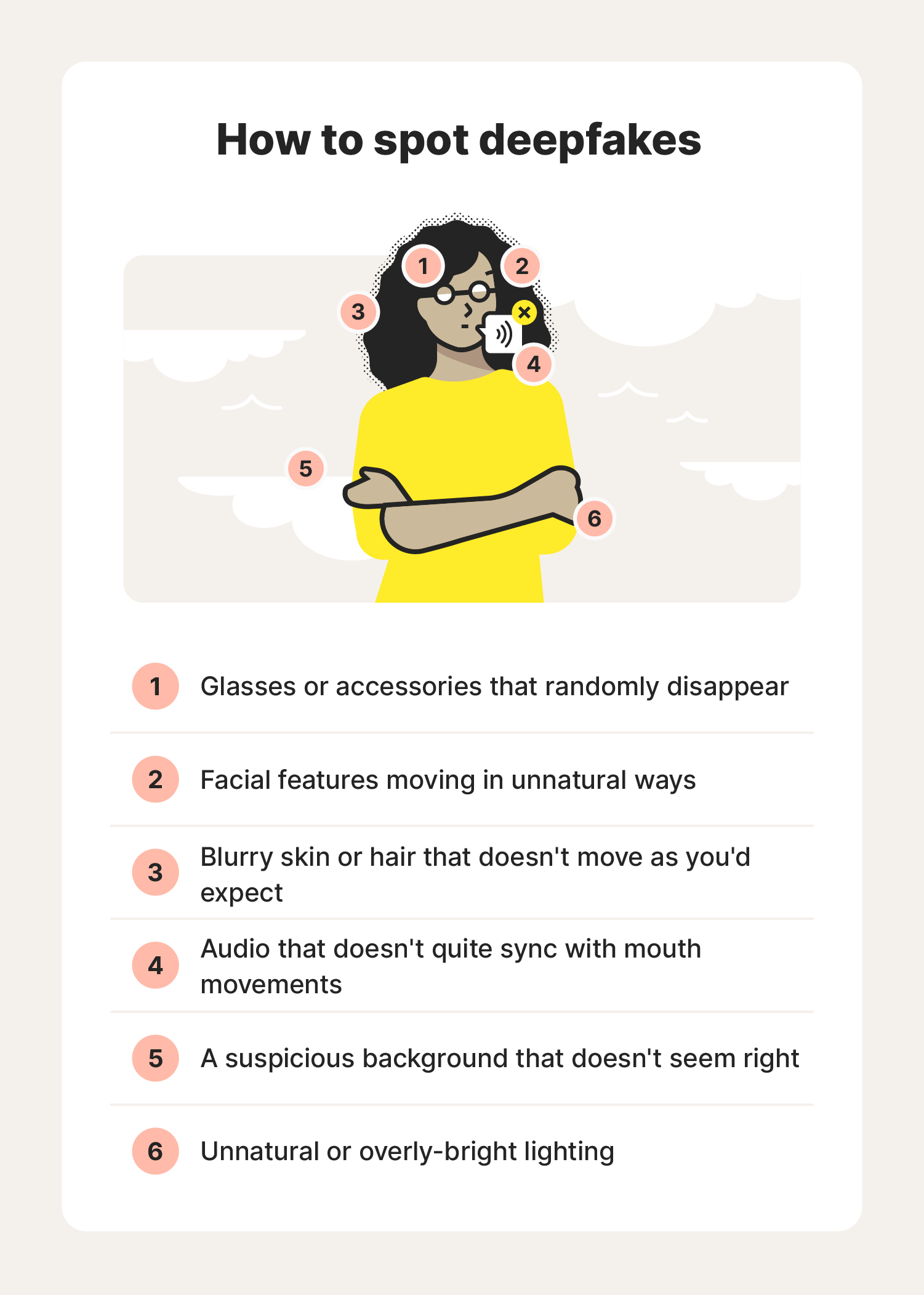

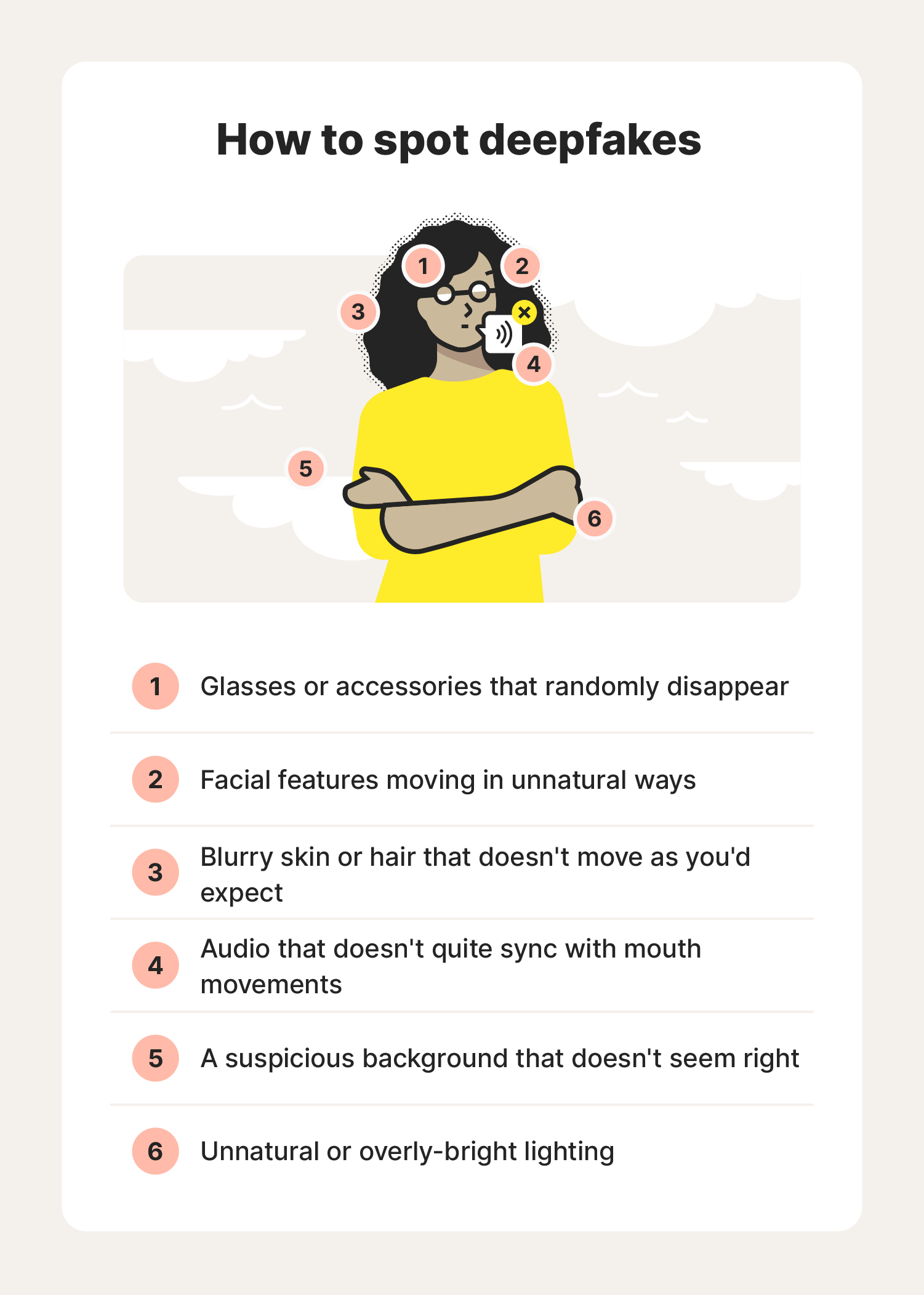

- Search for imperfections in videos: Most deepfakes are not perfect. Look for unnatural blinking, odd lighting, plastic skin texture, or shifting backgrounds.

- Listen for tone and language differences: Voice clones can seem robotic or “off.” Pay attention to unnatural vocal tone and cadence. Audio deepfakes may also use words and phrases that seem out of context.

- Verify caller and sender identities: If you receive a suspicious message from someone you know, contact them via a different channel to confirm their identity (e.g., if they sent you a video on Facebook Messenger, call them directly to confirm it was really them).

- Pay attention to unusual requests: Be wary of urgent or unusual requests sent via video, text, or audio. Scammers commonly request personal information or money, or try to get you to click malicious links.

- Look for unusual ads and app activity: If you receive messages from unknown senders or random pop-up ads on your devices, you might be a target of AI spyware. Apps that you don’t remember downloading can also be a sign of infiltration. Running a virus scan can help detect and remove spyware.
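Some of the warning signs above, such as urgent requests, requests for money or personal information, and links, can be loosely sketched as a checklist in code. The Python example below is only an illustration: the function name `find_red_flags` and the keyword lists are assumptions made for this sketch, and simple keyword matching is not how Norton Genie or any other real scam detector works.

```python
import re

# Illustrative keyword lists (assumptions, not drawn from any real scam detector).
URGENCY_TERMS = ("urgent", "immediately", "right now", "act now", "within 24 hours")
SENSITIVE_TERMS = ("social security", "password", "access code", "gift card",
                   "wire transfer", "bank account", "send money")
LINK_PATTERN = re.compile(r"https?://\S+|www\.\S+", re.IGNORECASE)


def find_red_flags(message: str) -> list[str]:
    """Return the warning signs from the tips above that appear in a message."""
    text = message.lower()
    flags = []
    if any(term in text for term in URGENCY_TERMS):
        flags.append("urgent request")
    if any(term in text for term in SENSITIVE_TERMS):
        flags.append("asks for money or personal information")
    if LINK_PATTERN.search(message):
        flags.append("contains a link")
    return flags


if __name__ == "__main__":
    # Hypothetical message imitating a family member.
    sample = "Mom, it's urgent! Send money right now: http://example.test/pay"
    for flag in find_red_flags(sample):
        print("Warning sign:", flag)
```

A match doesn’t prove that a message is a scam, and a clean result doesn’t prove that it’s safe. Treat any flag as a prompt to verify the sender through a different channel before responding.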

As AI scams become more common and harder to spot, investing in identity theft protection can offer valuable peace of mind. Join LifeLock for access to identity theft protection features, including notifications if we detect your personal information on the dark web, or potentially fraudulent applications made in your name.

AI identity theft statistics

AI technology is contributing to a new wave of identity theft and fraud risks. Here are some key AI identity theft statistics that highlight the growing prevalence and sophistication of these threats.

- AI has contributed to a 76% increase in account hijacking attacks, a 105% rise in phone account takeover fraud, and more than a 1,000% rise in SIM swap scams (Which?, 2025).

- 60% of consumers said they’d encountered a deepfake video between mid-2023 and mid-2024 (Jumio, 2024).

- Over 40% of fraud in the financial sector is AI-driven, with a total estimated increase of 80% in fraud events since the mainstream launch of GenAI in 2022 (Signicat, 2024).

- An estimated 40% of phishing emails targeting businesses are AI-generated (Vipre, 2024).

- American consumers lost over $12.5 billion to fraud in 2024 — a 25% increase over 2023 (CNET, 2025).

- 48% of Americans think the rise of AI has made them less “scam-savvy” than ever before (BOSS Revolution, 2024).

- AI cybersecurity works: Norton Genie, an AI scam detection tool, was found to detect AI scams with over 90% accuracy (AWS, 2024).

The scale of AI identity theft is possibly even worse than these identity theft stats reveal, as researchers may face challenges identifying and classifying new AI scams. AI is changing cybercrime on a fundamental level and vastly expanding its potential and risk.

What can be done to fight back against fraudsters and cybercriminals empowered by AI? It starts with building your understanding of AI threats and learning what you can do to help safeguard your data, finances, and identity in a new era of cybercrime.

5 real AI identity theft and fraud examples

It should already be clear that AI identity theft and fraud aren’t hypothetical future threats, but real, present risks. If you need more evidence, here are five recent examples of how AI scams are affecting consumers, celebrities, businesses, and more.

1. Elon Musk: the most common victim

AI scammers commonly use deepfakes to imitate influential people and celebrities because they know these videos have the potential to go viral. Elon Musk is a particularly common target and is reportedly the most deepfaked celebrity.

In 2024, scammers combined real interview footage with a deepfake of Musk promoting a crypto token. The video went viral, with some victims losing thousands in the cryptocurrency scam.

2. Alla Morgan: the bad romance

Alla Morgan is not a real person, but she seemed real on video to victim Nikki MacLeod. Alla was a deepfaked personality who formed a romantic connection with MacLeod and convinced her to send over £17,000.

Romance scams like these are likely to become even more common. Scammers can easily ask for money and personal information once they’ve won their victims’ hearts.

3. Sara Sandlin: the false friend

Last year, a Houston woman, Stacey Svegliato, received a video call from her friend Sara Sandlin asking her to help with a hacked Facebook account. Svegliato tried to help her friend by receiving and sending a series of access codes. But what she didn’t realize was that the caller wasn’t really her friend. It was a deepfake, and those access codes were giving hackers access to Svegliato’s own Facebook account.

Before long, Svegliato heard that more of her friends were receiving video calls — not from Sandlin, but from herself! The hackers had deepfaked Svegliato and harvested her contacts.

4. Taylor Swift: the cruel cookware

Taylor Swift is another commonly deepfaked celebrity due to her influence on millions of fans. Recently, she was deepfaked in a series of fraudulent ads promoting Le Creuset cookware.

The ads featured the pop icon offering free cookware sets to her fans in exchange for providing their personal information and a small shipping fee. Of course, fans who fell for the fake video never received cookware — but the scammers got access to their financial and personal info.

5. The con-ference call

Last year, a finance worker at a multinational firm logged into a video conference call with several coworkers whom he knew well. They all confirmed the legitimacy of a transfer request for over $25 million that he’d received earlier, purportedly from the company’s CFO.

Little did he know that not a single one of his colleagues on the conference call was real. They were all deepfakes. This elaborate scam demonstrated the power of AI deepfake technology and the extent to which scammers will go when big money is on the table.

Help protect against AI identity theft risks

AI identity theft and fraud are evolving rapidly. Deepfakes and other AI scams will grow more sophisticated and become harder to detect. Understanding the latest AI threats will help you keep your personal info safer, and investing in identity theft protection can add an extra layer of defense.

As a LifeLock subscriber, you’ll get access to tools that can help you reduce the online exposure of your personal information to minimize the risk of being targeted by a scammer. You’ll also receive alerts if your sensitive data is found leaked on the dark web or used for potential identity theft, so you can take action to help defend your identity and finances.

FAQs

How often are attackers using AI to commit fraud?

Some sources estimate that AI is involved in more than 40% of fraud in the financial sector, and given the growing availability and adoption of AI tools, it seems likely that this figure will continue to increase. Cybercriminals are actively using AI to launch more convincing scams, create deepfakes, and automate attacks. AI fraud is a current threat, not a future or fringe issue.

How do data brokers contribute to AI-driven identity theft?

Data broker sites may contribute to the risk of AI identity theft by collecting and selling people’s personal information. While much of this trade is legal, some data can be leaked or resold on the dark web. Malicious actors can then use AI to process this raw data, enabling highly targeted social engineering scams.

Are biometrics vulnerable to AI identity theft?

AI tools may be able to spoof biometric data — especially voice and face scans. There’s already evidence that deepfakes and voice clones are capable of breaking these security barriers, and future AI-powered applications might even make it possible for fraudsters to imitate fingerprint biometric data. Researchers are exploring more advanced biometrics, such as vein and heart rate recognition, to fight back against AI forgeries.

Editor’s note: Our articles provide educational information. LifeLock offerings may not cover or protect against every type of crime, fraud, or threat we write about.

LifeLock is part of Gen – a global company with a family of trusted brands.

Copyright © 2026 Gen Digital Inc. All rights reserved.